Introduction to AI Chips

AI chips are specialized hardware designed to efficiently handle the complex computational tasks required by artificial intelligence algorithms. These chips, which include Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), are essential for the development and deployment of advanced AI systems across various industries.

Types of AI Chips

Graphics Processing Units (GPUs)

GPUs excel in training AI models due to their parallel processing capabilities. Originally designed for graphics rendering, they can perform multiple calculations simultaneously, making them ideal for handling the intensive computations required in AI training.

Field-Programmable Gate Arrays (FPGAs)

FPGAs offer flexibility because they can be reprogrammed after manufacturing to suit different tasks. They are particularly effective at inference, that is, applying trained AI models to real-world input data.

Application-Specific Integrated Circuits (ASICs)

ASICs are custom-designed chips optimized for specific AI applications. They can outperform general-purpose processors on the workloads they target, and are often used in large-scale deployments where efficiency is critical.

Neural Processing Units (NPUs)

NPUs are specialized chips designed for deep learning tasks such as object detection and speech recognition. They work alongside CPUs, taking on AI workloads that the CPU handles less efficiently.
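To make the idea of parallel processing concrete, here is a minimal Python sketch of why the matrix multiplications behind AI training suit parallel hardware such as GPUs: every output cell is an independent dot product, so all cells can in principle be computed at the same time. The function names (`dot`, `matmul_parallel`) and the `workers` parameter are hypothetical, chosen only for illustration. Python threads do not deliver the speedup of real accelerator hardware; the sketch shows only that the work splits into independent pieces.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Compute one output cell: a single multiply-accumulate chain."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(A, B, workers=4):
    """Multiply A (n x k) by B (k x m), treating every output cell as its own task."""
    cols = list(zip(*B))  # columns of B, so each task needs one row and one column
    n, m = len(A), len(cols)
    cells = [(i, j) for i in range(n) for j in range(m)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # No task depends on another task's result, so they can run in any order.
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), cells))
    # pool.map returns results in submission order; regroup them into n rows.
    return [values[i * m:(i + 1) * m] for i in range(n)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_parallel(A, B))  # [[19, 22], [43, 50]]
```

A 2 x 2 product has only four independent cells. The matrices in a large model have millions, which is the kind of workload that hardware with thousands of parallel execution units is built for.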

Applications of AI Chips

AI chips play a crucial role across various industries:

- Large Language Models (LLMs): Speed up the training and refinement processes necessary for developing advanced natural language processing tools.

- Edge Computing: Enable smart devices to process data locally, reducing latency and improving security.

- Autonomous Vehicles: Facilitate real-time decision-making by processing vast amounts of sensor data quickly.

- Robotics: Enhance machine learning capabilities in robots for improved interaction with their environments.

Regorholding: Together We Innovate for a Sustainable Future

A Shared Vision for a Better Tomorrow

Fera’s partnerships with NVIDIA and AMD are strategic alliances that enable the company to offer a wide range of products to its customers.

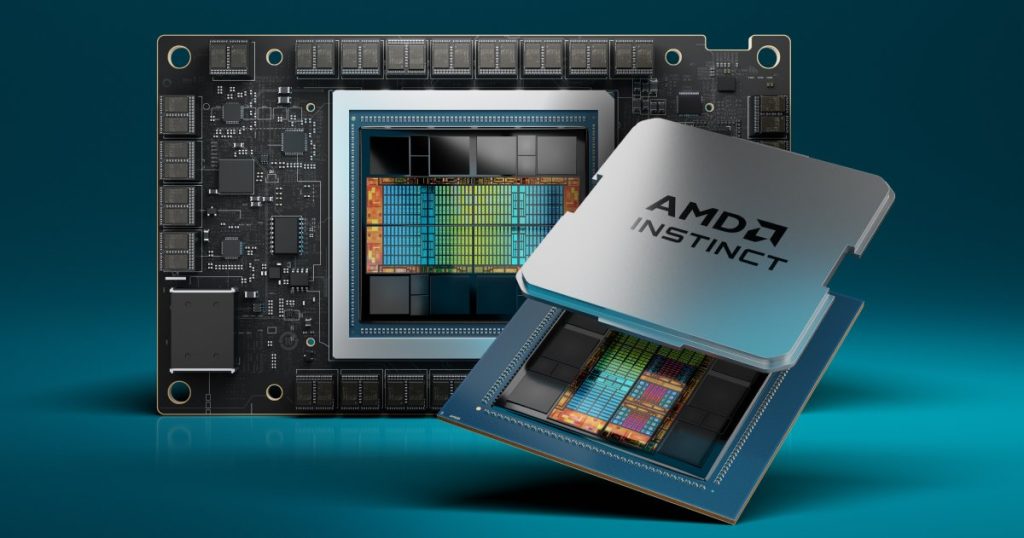

MI300X GPU

The AMD Instinct MI300X is an AI accelerator designed for high-performance computing (HPC) and generative AI workloads. It builds on AMD’s chiplet architecture, combining multiple chiplets in a single package to increase performance.

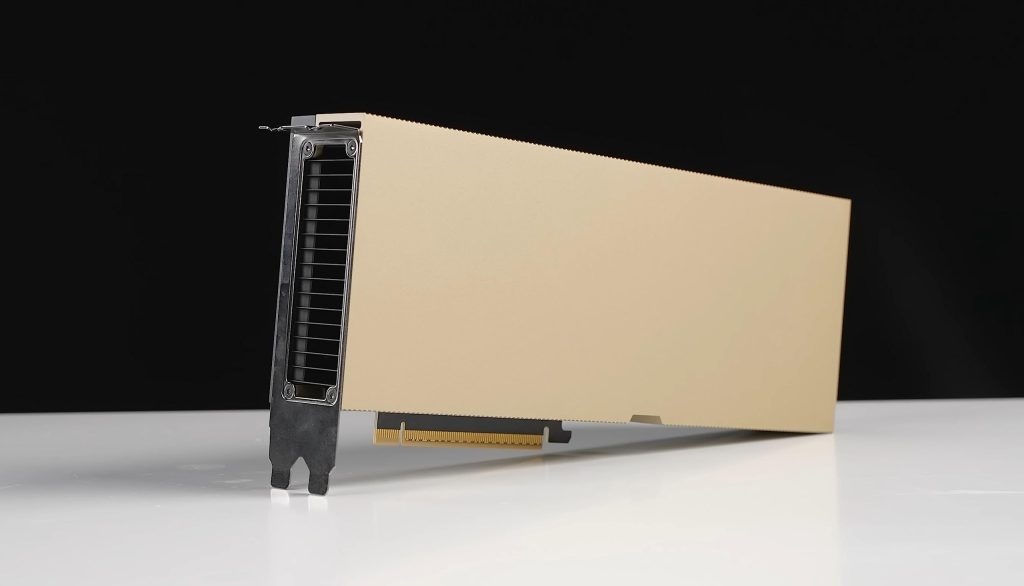

H100 GPU

The NVIDIA H100 Tensor Core GPU is a state-of-the-art graphics processing unit designed for high-performance computing (HPC) and artificial intelligence (AI) workloads. Built on the NVIDIA Hopper architecture, it features significant advancements over its predecessor, the A100, making it particularly suited for large-scale AI model training and inference.

Future Trends in AI Chip Development

The demand for AI chips is expected to grow significantly as industries increasingly adopt artificial intelligence technologies. Innovations such as multi-die systems and advanced semiconductor architectures will continue to enhance the capabilities of these chips. Additionally, companies are exploring custom chip designs to reduce reliance on dominant suppliers like NVIDIA.

Why AI Chips Matter

AI chips are critical in enabling faster and more efficient processing of large datasets, which is fundamental for advancements in areas such as:

- Large Language Models: Accelerating the training and refinement of models that power chatbots and virtual assistants.

- Edge Computing: Allowing devices like smartphones and IoT gadgets to process data locally, reducing latency and improving security.

- Autonomous Vehicles: Enhancing the capabilities of self-driving cars by enabling real-time analysis of sensor data.